I honestly only made it a few minutes in, and there is probably plenty of merit to the rest of her perspective. But… I just couldn’t get past the “AI doesn’t exist” part. I get that you don’t know or care about the difference and you associate the term “AI” with sci-fi-like artificial sentience/AGI, but “AI” has been used for decades to refer to things that mimic intelligence, not just full-on artificial general intelligence. Algorithms governing NPC behavior and pathfinding in video games are AI, and that’s a perfectly accurate description. SmarterChild was AI… even ELIZA was AI. Things like GANs and LLMs are certainly AI. The goalposts for “intelligence” have moved farther and farther back with every innovation. The AI we have now was fantasy just 20 years ago. Even just five years ago, to most people.

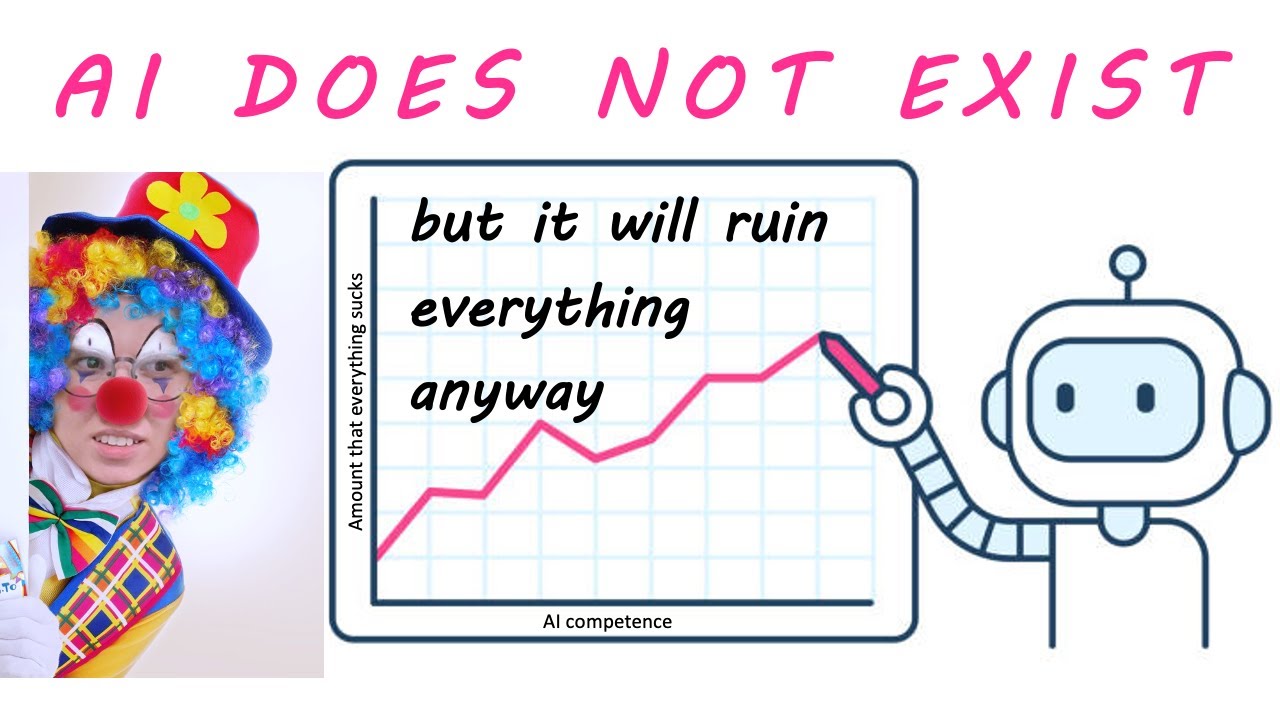

Video: AI doesn’t exist. It is the super-category for machine learning, something that does exist. In my next video I will prove water does not exist by showing you pictures of wet kittens.

I tuned out when she said AI doesn’t create anything new.

Right, that’s only true in the actually, literally, completely true sense. I have a love-hate relationship with AI; I use several of them. As far as I’m concerned, AI stands for “assets and inspiration” because that’s the only use for it. It can generate royalty-free assets to chop up and use in art, and it can help brainstorm ideas if you’re feeling uninspired, but it’s actually really terrible at creating anything new or interesting on its own. There’s a difference between art and content, and what AI generates is content. Stuff to fill space. It’s not going to replace novelists any time soon, but if you make your living writing meaningless ad copy that just fills space, then your job is in serious trouble. It can’t create art because messaging is a fundamental part of art, and AI has nothing to say. Artists and writers do a lot more than just regurgitate their influences and copy patterns. They also have a point to make. When you engage with a piece of art, the artist is trying to say something, make commentary on the world, or evoke a specific emotional response in the audience. There is intention in art, but “AI” in its current and likely its future state is incapable of approaching any task with intention. It’s just a machine learning tool spitting out formulaic patterns. It’s great if you want to create 250 stylized variations of the letter “B”, so artists can use AI to speed up their brainstorming phase, and in that sense it can be a useful tool for artists, but it will never create anything new or interesting without a heaping helping of human interference.

AI will be writing shitty superhero movies that fill up space for the next thousand years, because those kinds of movies are just content. Aesthetically pleasing content, but still just content. It’ll never write anything that you haven’t seen a hundred times before.

I wish I could upvote you multiple times! This is the most accurate and clearly explained take on the current state of things. You nailed it about the assets too.

It’s a 1hr argument in semantics, which is fun I guess. I don’t necessarily agree, but I appreciate the perspective she adds.

Interesting take from a physicist’s point of view, although she strays somewhat out of her area of expertise.

Which she readily acknowledges in the first two minutes of the video, right before explaining the role machine learning plays in physics. It’s definitely worth watching the whole video. She’s one of my favorite science communicators because she’s pretty transparent about which topics are within her area of expertise and which topics are on the periphery.

I love this channel! Her discussions of the toxic culture in physics departments were both heartbreaking and really brave. That said, I had a feeling this particular video might be a bit out of scope, but I might give it a try since it sounds like y’all enjoyed it.

(Random, but wanted to add that her rant about robots in movies being bad tools is hilarious)

I love her. I’m sad the video is getting hate in here, but I haven’t watched it yet.

Honestly I’m not surprised. Lemmy has a lot of very literal “well actually” types. Many of them seem more mature and older, so they’re at least partially self-aware. But in general it still feels like a systemic issue. I wouldn’t be surprised if someone here responded to the video on robots in movies with something like “well, duh! Robots _have_ to look like that because of the writing. It humanizes them.” Just totally missing the fact that she’s clearly a very intelligent person and is very aware of the basic facts of the situation, just like everyone else is.

Yeah, I watched about half of it; it is a long one. She very clearly defines what she means and states her reasons and opinions.

I agree she doesn’t have a comp sci doctorate in machine learning, but she doesn’t claim to.

Seems to be a good video on a contentious topic and whether people agree or not I don’t think she was misrepresenting anything.

She is awesome. I think she’s the most engaging science communicator I’ve found in years, and it’s because her videos are so earnest and stripped down. She’s really awesome.

This reminds me of the Adam Ruins Everything guy talking about how LLMs are the next Segway.

Dude’s an idiot, it’s not going away, it’s going to integrate with everything in the next 50 years.

It won’t go the way of the Segway. It’ll go the way of Flash. Flash was a great tool for creating all sorts of engaging content, but the reason it went away is the kind of people who adopted it and how it was used. By that I mean advertisers. People began to associate Flash with sketchy advertisements, scams, and obnoxious web design. So even though it was great for all sorts of things, people abandoned it because the people who used it the most were making crap. AI will go like that. Not all AI is equal, and some of it will be around forever, but I suspect that most LLMs will run their course because they’re going to be used by advertisers and other ne’er-do-wells to make cheap content cheaply, and people will get sick of that pretty fast.

Flash went away because better alternatives appeared. And then the iPhone launched without support for it, which accelerated the move away from Flash.

Got about halfway through it. Does she explain her “AI doesn’t exist” position any better? It seems like she just uses that example of cats at the beginning, then spends the rest of the video on a general overview of the limitations and dangers of AI/ML. It would be nice if she defined what she thinks intelligence is. The rest of the video seems fine, albeit well-trodden by now, but her insistence that “AI doesn’t exist” needs more explanation.

Her argument is that AI doesn’t exist because it doesn’t have the same comprehension level as humans, which is just an argument of terminology.

Then she proceeds with all the weaknesses of AI, which are mostly valid arguments, but not what the title of the video is about.

Then she discusses which Muppet should play which Star Trek: TNG character, which I have no complaint about.

I don’t even know what the real reason to create true AI would be other than curiosity. Wouldn’t true AI just be like a human being? We can make human beings all day long! It’s more fun, too!

Yes, but it’s a human being you don’t have to pay a living wage, or listen to complain that it wants to be let out of the office to see its family.

That true AI would also be faster than a human. That alone can make it much better for many things.

All that means to me is that it could be dumb faster.

Here is an alternative Piped link: https://piped.video/EUrOxh_0leE

Piped is a privacy-respecting open-source alternative frontend to YouTube.

I’m open-source, check me out at GitHub.

I agree with her standpoints about the danger of AI, but I also think she ignores important applications of AI.

Of course AI shouldn’t be used for life-and-death decisions. If there’s even a 0.1% risk that someone could be harmed by the AI, then it shouldn’t be used (on its own).

But not all applications are life-threatening. For example, you can use computer vision to determine the quality of apples. If an apple is bad, it is immediately discarded. This reduces the number of bad apples shipped to the grocery store, which in turn reduces cost.

Is it terrible if the AI misses 20% of the bad apples? Not really. Those apples would’ve been shipped anyway without the AI.

If you’re ok with some error, then you have a case for AI, and many industrial applications are like that.
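The apple example is really an arithmetic argument, so here is a minimal Python sketch of it with made-up numbers. The batch size, the 10% bad rate, and the 2% false-reject rate are hypothetical; only the 80% catch rate comes from the “misses 20%” figure above. Because the filter only ever removes apples, a missed bad apple ships exactly as it would have with no filter at all, so the only new cost of error is good apples thrown away.

```python
def sort_apples(total: int, bad_pct: int, catch_pct: int, false_reject_pct: int = 0) -> tuple[int, int]:
    """Return (bad apples shipped, good apples wrongly discarded).

    Percentages are whole numbers so the arithmetic stays exact.
    The filter only removes apples: a missed bad apple simply ships,
    just as it would have without any filter.
    """
    bad = total * bad_pct // 100
    good = total - bad
    caught = bad * catch_pct // 100
    wrongly_discarded = good * false_reject_pct // 100
    return bad - caught, wrongly_discarded


# Hypothetical batch: 1,000 apples, 10% of them bad.
no_filter = sort_apples(1000, bad_pct=10, catch_pct=0)
imperfect_filter = sort_apples(1000, bad_pct=10, catch_pct=80, false_reject_pct=2)
print(no_filter)         # (100, 0)
print(imperfect_filter)  # (20, 18)
```

Under these assumptions the imperfect filter cuts shipped bad apples from 100 to 20 while discarding 18 good ones; whether that trade pays off depends on prices the comment doesn’t give.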

How do you feel about the self-driving car use case? Say, for example, a self-driving car has a 0.5% risk of an accident, and thus human harm, over its usage lifetime, while a human driver has a 5% risk (I’m making up numbers for the sake of argument; the point is that the self-driving car still has a 0.1% or greater chance of causing harm, but that’s much lower than a human’s). Would you still be against the tech, even though disallowing it would statistically cause more harm?
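The comparison in this comment is an expected-value argument. A minimal sketch, assuming a hypothetical fleet of 10,000 cars in which every car carries the same independent lifetime accident risk (the 5% and 0.5% figures are the commenter’s admittedly made-up numbers):

```python
def expected_accidents(cars: int, lifetime_risk_pct: float) -> float:
    """Expected number of cars (out of `cars`) that have an accident over their lifetime.

    Assumes every car has the same independent lifetime risk, given in percent.
    """
    return cars * lifetime_risk_pct / 100


FLEET = 10_000  # hypothetical fleet size, chosen only to make the numbers readable

human = expected_accidents(FLEET, 5.0)         # made-up human-driver risk from the comment
self_driving = expected_accidents(FLEET, 0.5)  # made-up self-driving risk from the comment

print(human, self_driving, human - self_driving)  # 500.0 50.0 450.0
```

Averaging like this assumes errors are independent and spread evenly across cars; a failure that can be systematically triggered would break that assumption.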

If it can be proven that it causes fewer accidents, maybe.

My fear is that the accidents can be systematically triggered. For example, there could be one particular curve the AI has trouble understanding, or a person standing in one particular corner could cause the AI to completely misread the scene, or one particular car color could confuse it.