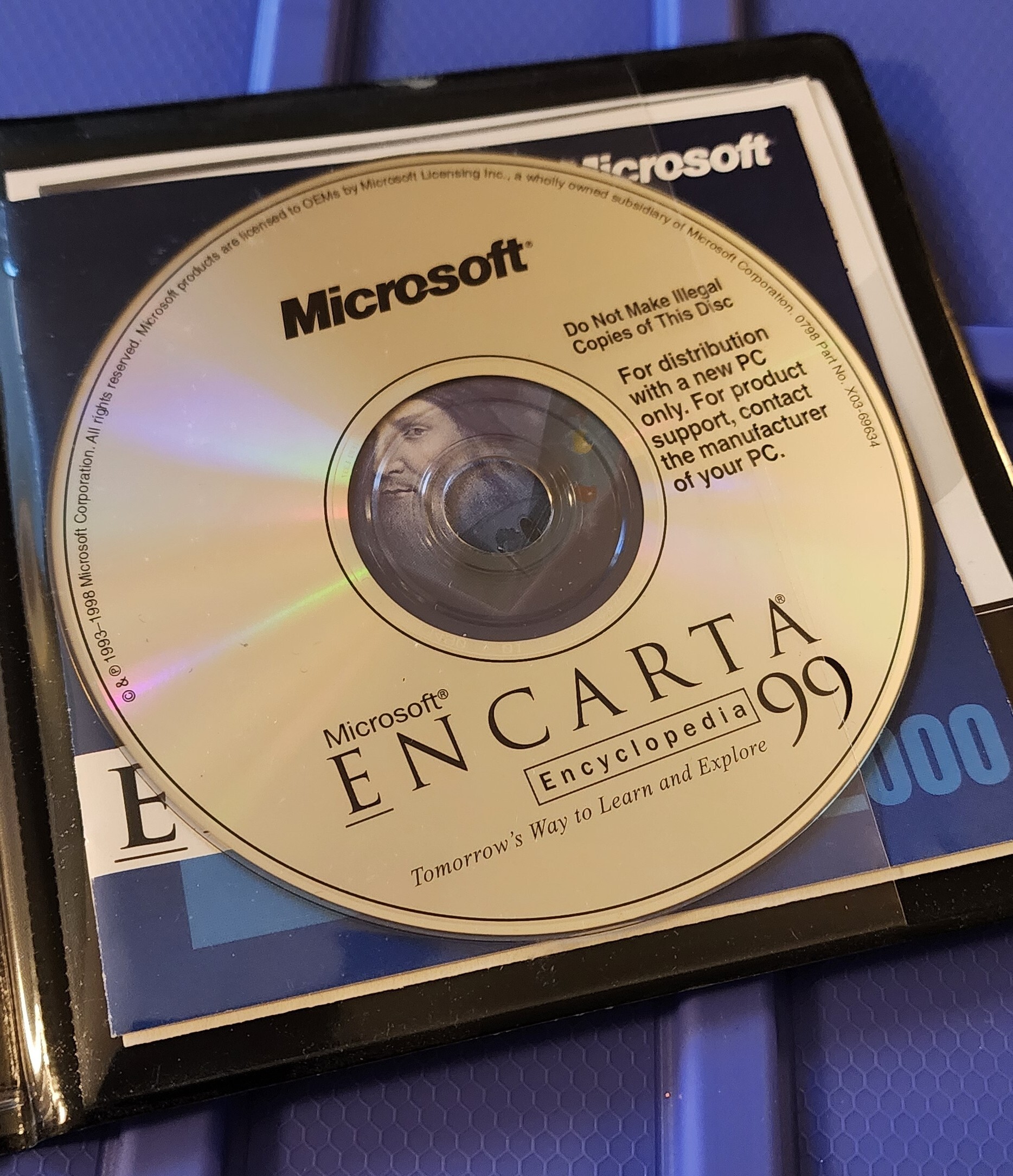

Not needed, I’ve got this gem.

Y’all can look, but don’t touch.

Fun fact: you can download Llama 3, an LLM made by Meta (which is surprisingly good for its size), and it’s only 4.7GB. A single-layer DVD stores 4.7GB of data, meaning you could in theory have an LLM on a DVD.

…disc 1 of 150

Technically possible with a small enough model to work from. It’s going to be pretty shit, but “working”.

Now, if we were to go further down in scale, I’m curious how/if a 700MB CD version would work.

Or how many 1.44MB floppies you would need for the actual program and smallest viable model.
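For the curious, here's a rough back-of-the-envelope sketch of how many discs a 4.7GB model would span. It assumes nominal capacities (1,474,560 bytes for a 3.5" HD floppy, 700 MiB for a "700MB" CD, 4,700,372,992 bytes for a single-layer DVD+R) and treats "4.7GB" as decimal gigabytes. It only counts the model weights, not the program that runs them, and `discs_needed` is just an illustrative helper name.

```python
# Nominal media capacities in bytes (assumptions; usable space varies by format)
FLOPPY_BYTES = 1_474_560        # 3.5" HD floppy: 1440 KiB
CD_BYTES = 700 * 1024 * 1024    # "700MB" CD, taken as 700 MiB
DVD_BYTES = 4_700_372_992       # single-layer DVD+R

MODEL_BYTES = 4_700_000_000     # "4.7GB" model, taken as decimal gigabytes


def discs_needed(payload_bytes: int, capacity_bytes: int) -> int:
    """Ceiling division: how many media of a given capacity the payload spans."""
    return -(-payload_bytes // capacity_bytes)


if __name__ == "__main__":
    for name, cap in [("floppy disks", FLOPPY_BYTES), ("CDs", CD_BYTES), ("DVDs", DVD_BYTES)]:
        print(f"{name}: {discs_needed(MODEL_BYTES, cap)}")
```

Under those assumptions it works out to 3188 floppies, 7 CDs, or a single DVD, before you even add the runtime.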

Might be a DVD. Some of the smaller Ollama models are only around 1.5GB, so you could store several models on one DVD.

squints

That says “PHILIPS DVD+R”

So we’re looking at a 4.7GB model, or just a hair under the tiniest, most incredibly optimized implementation of <INSERT_MODEL_NAME_HERE>

Llama 3 8B, Phi 3 Mini, Mistral, Moondream 2, Neural Chat, Starling, Code Llama, Llama 2 Uncensored, and LLaVA would each fit.
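A quick sanity check of that list, as a sketch only: the sizes below are approximate download sizes assumed from Ollama's README model table (default 4-bit tags), not measured here, so check `ollama list` for what you actually have. `MODEL_SIZES_GB` and `fits` are hypothetical names for illustration.

```python
DVD_BYTES = 4_700_372_992  # single-layer DVD+R (assumed nominal capacity)

# Approximate sizes in decimal GB (assumption: taken from Ollama's README table;
# quantization and tags change the real numbers)
MODEL_SIZES_GB = {
    "llama3 8b": 4.7,
    "phi3 mini": 2.3,
    "mistral 7b": 4.1,
    "moondream 2": 0.829,
    "neural-chat 7b": 4.1,
    "starling 7b": 4.1,
    "codellama 7b": 3.8,
    "llama2-uncensored 7b": 3.8,
    "llava 7b": 4.5,
}


def fits(size_gb: float, capacity_bytes: int = DVD_BYTES) -> bool:
    """True if a single model of size_gb (decimal GB) fits on the disc on its own."""
    return size_gb * 1e9 <= capacity_bytes


if __name__ == "__main__":
    for name, size in MODEL_SIZES_GB.items():
        print(f"{name}: {size} GB, fits on one DVD: {fits(size)}")
    total = sum(MODEL_SIZES_GB.values())
    print(f"all together: {total:.3f} GB, fits on one DVD: {fits(total)}")
```

Each one fits on its own, but all of them together come to roughly 32GB, so "many models on one DVD" only works for the small ones.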

Thought that said cbatGPT

Please keep us updated with all future inconsequential misinterpretations.

-Management